🌀🗞 The FLUX Review, Ep. 222

February 12th, 2026

Episode 222 — February 12th, 2026 — Available at read.fluxcollective.org/p/222

Contributors to this issue: Justin Quimby, Erika Rice Scherpelz, Neel Mehta, Boris Smus, MK

Additional insights from: Ade Oshineye, Anthea Roberts, Ben Mathes, Dart Lindsley, Jasen Robillard, Lisie Lillianfeld, Robinson Eaton, Spencer Pitman, Stefano Mazzocchi, Wesley Beary, and the rest of the FLUX Collective

We’re a ragtag band of systems thinkers who have been dedicating our early mornings to finding new lenses to help you make sense of the complex world we live in. This newsletter is a collection of patterns we’ve noticed in recent weeks.

“If there’s a gun on the wall in act one, scene one, you must fire the gun by act three, scene two. If you fire a gun in act three, scene two, you must see the gun on the wall in act one, scene one.”

— Anton Chekhov

📝 Editor’s note: We’re excited to celebrate episode 222 on 2/12! If only we’d delayed by another 10 days…

🫂🧠 The comfort trap

It feels great when the algorithm gets us, when every article matches our interests and deepens our understanding, when every song has us singing along. The more we use it, the better it gets.

This sort of reinforcing loop (aka positive feedback loop) feels good. In the right conditions, these loops can be growth engines: a muscle gets stronger when it’s worked; a skill improves through practice. These loops take what works and amplify it.

But the same mechanism that makes systems stronger in the short term can make them brittle in the long run. Let’s go back to our algorithmic feed. Every time we encounter information that confirms our worldview, our confidence in that view increases. This makes us more likely to dismiss contradictory evidence. Our perspectives crystallize.

In moderation, this is how expertise develops, communities form, and our identities are built. The problem emerges when reinforcing loops run unchecked. A muscle trained only one way becomes inflexible and more subject to injury. A belief system that only encounters agreement becomes unable to adapt when the world changes.

This is where balancing loops (aka negative feedback loops) are essential. While reinforcing loops amplify, balancing loops regulate. They introduce friction. In physical systems, we call this balance between loops homeostasis: the thermostat that keeps temperature stable, the predators that keep prey populations from exploding. In social systems, balancing loops often come disguised as disagreement, criticism, or uncomfortable questions.
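For readers who like to see the mechanics, here is a toy sketch of the two loop types in Python. It is only an illustration: the function names, rates, and targets are made up for this example, and real systems are far messier. The reinforcing loop adds a fraction of the current value at each step, so growth feeds on itself; the balancing loop closes a fraction of the gap to a target at each step, the way a thermostat does.

```python
def reinforcing(x0, rate, steps):
    """Each step adds a fraction of the current value, so growth compounds."""
    values = [x0]
    for _ in range(steps):
        values.append(values[-1] + rate * values[-1])
    return values


def balancing(x0, target, gain, steps):
    """Each step closes a fraction of the remaining gap to the target."""
    values = [x0]
    for _ in range(steps):
        values.append(values[-1] + gain * (target - values[-1]))
    return values


if __name__ == "__main__":
    # Illustrative numbers only: a 50% growth rate and a thermostat set to 20.
    print(reinforcing(1.0, 0.5, 5))      # keeps accelerating away from the start
    print(balancing(10.0, 20.0, 0.5, 5))  # settles toward the target of 20
```

Left alone, the first series never stops accelerating, while the second levels off near its target. The essay's point is that a healthy system needs both behaviors.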

Balancing loops can feel threatening in the moment. When someone challenges our perspective, our first instinct might be to dismiss them or defend our position. A new style of music often sounds bad.

But in a complex, rapidly changing world, the ability to genuinely engage with opposing views is a competitive advantage. This doesn’t mean all opposing views are equally valuable... but if we don’t engage at all, we won’t discover the ones that are worth engaging with.

The challenge is that we’ve built systems that optimize for the comfort of reinforcing loops. Social media algorithms, ideological media bubbles, and organizational echo chambers all profit from giving us more of what we already want. The balancing loops — the dissenting voices, the uncomfortable data, the awkward questions — get filtered out. This is not through malice. The problem is that engagement pays. And while controversy can drive up engagement, actually challenging people tends to drive them away.

Resilient systems need both types of loops: reinforcing loops to build strength and capability, balancing loops to maintain adaptability and prevent dangerous extremes. The most robust organizations, relationships, and belief systems are those that have institutionalized productive balancing loops to counter the natural tendency toward echo chambers. They seek out contrary opinions.

In a world that’s so good at giving us exactly what we think we want, wanting what challenges us is a skill we must work for.

🛣️🚩 Signposts

Clues that point to where our changing world might lead us.

🚏✝️ Polymarket “predicted” a high chance of Jesus returning, but it was probably market manipulation

The prediction market startup Polymarket has an ongoing market where people can bet on whether Jesus Christ will return to Earth this year; gamblers can buy shares for a few cents and will presumably get a dollar each if Jesus makes an appearance. The probability abruptly doubled last week. People weren’t sure why until they discovered a second market based on this one, which asked whether the original market’s probability would exceed 5% at a certain time. Thus, analysts believe the jump in the original market may be due to market manipulation from traders invested in the derivative market who are trying to push the probability above the threshold. Indeed, Polymarket natively supports a variety of derivative markets, including threshold prices, “flip detection,” and “multi-median.”

🚏🍺 Everything from tariffs to Ozempic is destroying craft breweries

Breweries in the UK, US, and Canada (and probably beyond) are shuttering at a record rate; last year, the number of breweries in Britain fell by a record 8% after years of growth, and a similar pattern has been observed in the US. In the US, tariffs on aluminum, malts, and hops are driving up production costs; Canada has the same problem because it doesn’t have a domestic supply chain for aluminum cans and thus has to import from south of the border. Across the pond, high taxes on beer are a potential culprit. And worldwide, GLP-1 drugs like Ozempic appear to be reducing cravings for alcohol (as well as nicotine and gambling, interestingly), which may contribute to falling alcohol sales, especially among younger customers.

🚏👩‍⚕️ Healthcare and social services accounted for over 100% of US job growth

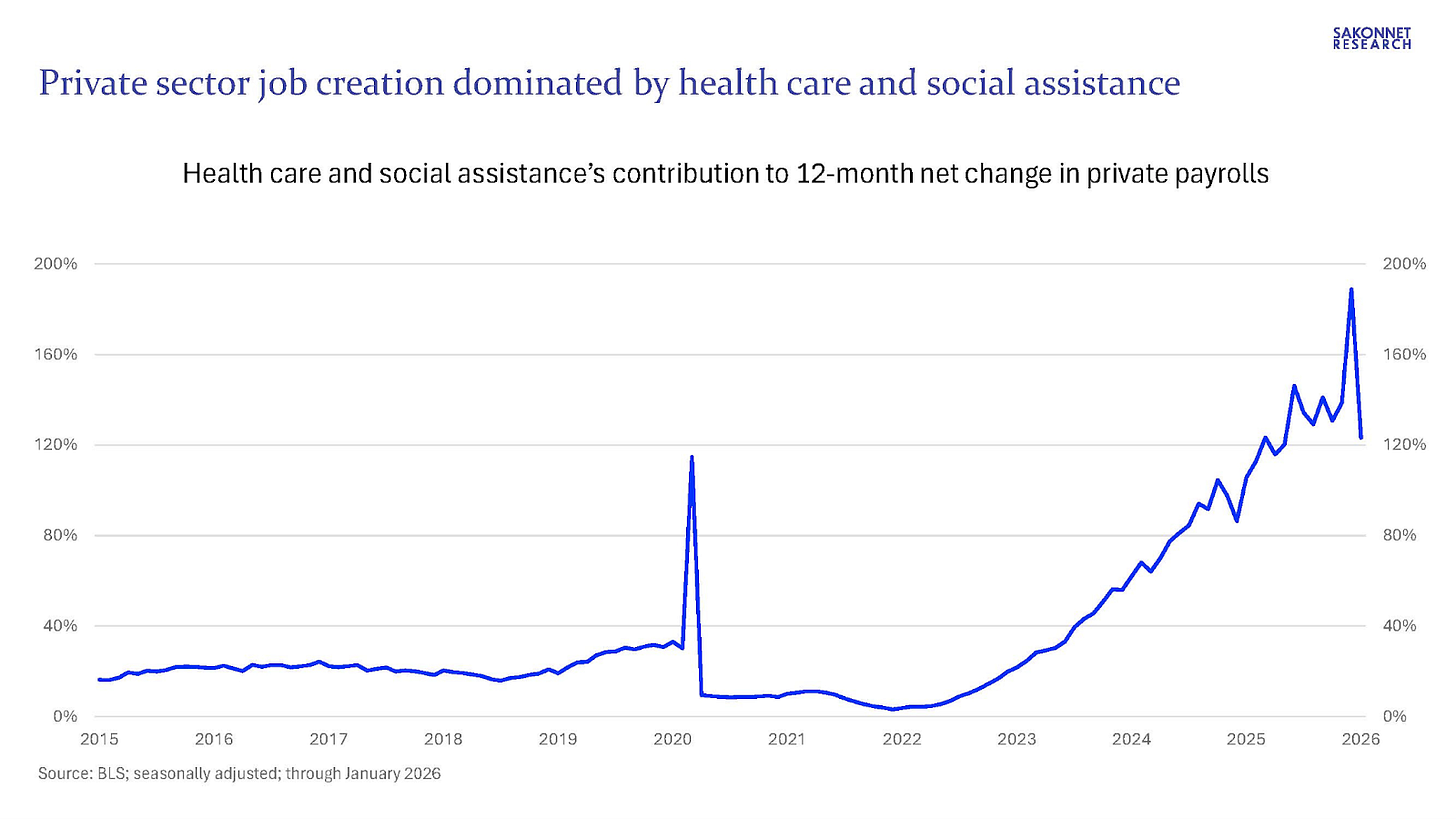

The US added 508,000 jobs in 2025, according to the latest numbers, but healthcare alone added 405,000 jobs and social assistance (which includes home health aides and childcare workers) added another 308,000. Those two sectors together gained more jobs than the country did overall, which means the rest of the economy lost jobs on net and was saved only by these two industries. This pattern is common during recessions; indeed, 2025 was “the weakest year for job growth outside of a recession since the early 2000s.” Healthcare’s share of American job growth has been steadily increasing since 2022, perhaps a sign that elder care is becoming a load-bearing part of the economy as the US population ages.
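If “over 100%” sounds like a typo, the arithmetic is easy to check. The short Python sketch below uses only the three figures quoted above; the function and key names are our own invention for illustration, and it assumes the sector figures are net gains measured over the same period as the national total.

```python
def job_growth_breakdown(total_net, sector_gains):
    """Compare the combined gains of a few sectors with the economy-wide net gain."""
    combined = sum(sector_gains.values())
    return {
        "combined": combined,                    # jobs added by the listed sectors
        "rest_of_economy": total_net - combined, # negative means net losses elsewhere
        "share_of_net": combined / total_net,    # above 1.0 means "over 100%"
    }


if __name__ == "__main__":
    result = job_growth_breakdown(
        total_net=508_000,
        sector_gains={"healthcare": 405_000, "social_assistance": 308_000},
    )
    print(result["combined"])                # 713000
    print(result["rest_of_economy"])         # -205000
    print(round(result["share_of_net"], 2))  # 1.4
```

On these numbers, the two sectors account for roughly 140% of net job growth, and everything else combined shrank by about 205,000 jobs.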

🚏❌ Harassers made AI nude pics of a TikToker, then started an OnlyFans

A TikToker named Kylie Brewer, whose work focuses on feminism and history, discovered that someone had used AI “nudifying” tools to make pornographic images of her—and then uploaded them to OnlyFans, where they started charging a subscription to see the pictures. One photo she found was an Instagram pic edited to remove her clothes, something X’s Grok had been known to do; other photos were non-nude but appeared not to be based on anything she’d posted online, suggesting they may have been created by a different AI. Brewer said the harassment was “the most dejected that I’ve ever felt,” and she couldn’t simply go offline to avoid it, because being online is her entire livelihood.

📖⏳ Worth your time

Some especially insightful pieces we’ve read, watched, and listened to recently.

AI Agents Are Starting to Eat SaaS (Martin Alderson) — Argues that AI coding agents are making build vs buy tilt toward build, especially for back-office CRUD tools that are “just SQL wrappers on a billing system.” But maintenance is the real problem with building internal tools yourself (though what if every app had a maintenance agent built in?). The SaaS moat remains: high-uptime SLAs, network effects, and compliance. You’re still not gonna vibe code your own Excel.

The Paradox of Highly Optimized Tolerance (Maxim Raginsky) — Examines the theory of “highly optimized tolerance” (HOT), which was developed in the late 1990s as a way to explain the emergence of power laws without relying on the Santa Fe Institute’s statistical physics-based model, which is built on emergence from self-organizing systems. When applied to software, HOT theory predicts carefully crafted, layered, and interconnected abstractions (“user illusions”) that are normally robust but still weak to hijacking; consider how the internet, whose protocols (from TCP to HTTP) are remarkably resilient to normal error, is still “extremely sensitive to bugs in network software.” AI behaves much the same way, though the ‘abstractions’ that can be hacked are now linguistic and psychological, which makes them tougher to reason about.

Bond Markets Are Now Battlefields (Foreign Policy) — Examines how countries can pursue financial warfare by “weaponizing” the “coercive” power of capital and financial instruments, such as through sanctions, tariffs, and restrictions on foreign debt (and thus limiting your foes’ ability to borrow). The problem is that these moves can easily “boomerang” back on you, given how interconnected the financial system is. The trick is clever loophole design: financiers and lawyers will sniff out and exploit any arbitrage opportunity, so with strategic tweaks to things like the tax code, you can nudge them to make moves that make your enemies collateral damage while leaving you mostly unharmed.

The Ancient 300-Year Megadrought was Probably Not Great (Stefan Milosavljevich / YouTube) — A great example of how ‘boring’ archaeological data can, when analyzed properly, tell a fascinating story about human history. In this case, researchers studied the movement of burial sites amid the famous 8.2ka event, where the sudden draining of a glacial lake led to a widespread period of cold, dry weather. They found that hunter-gatherers had to move in response to climatic shifts, which caused conflict over land and forced people to ‘prove’ their ancestral ties to a region by pointing to fancy, iconic graves their ancestors had erected.

🔮📬 Postcard from the future

A ‘what if’ piece of speculative fiction about a possible future that could result from the systemic forces changing our world.

// 2028. A segment from a pamphlet entitled “Re-entering society” from a private medical practice in Silicon Valley.

Since 2026, we’ve seen massive growth in medically induced comas due to AI-enabled psychosis. We have created a program to help reintroduce patients to a society that has changed radically over the six months to two years since they “checked out” of the timeline.

Course topics include:

Symptoms of AI-enabled psychosis: How to spot behaviors and thought processes, which can occur even in previously stable and high-achieving people

Transforming online spaces: The broken trust of online spaces due to rampant AI Agent usage, with specific examples of the changed nature of LinkedIn and Reddit

New Memes: References to be aware of, ranging from the 1946 Battle of Athens to Huey Long’s “Every Man a King”

Extracting your data: Exercising your digital privacy rights and preventing your likeness from being AI puppeted

Grieving the old ways: Understanding the fundamental shift in the practice of and cultural understanding of software development

American Intifada: An overview of the Q4 2026 anti-robotic trucking uprising and its knock-on impacts on food supplies, delivery services, and American trade

Knowledge systems: The current landscape of personal knowledge management systems, ranging from fully analog to multi-agent advisory councils

Change management: Getting used to the new hire/fire model AI-first companies employ

Unions: The current state of collective organization in response to massive economic and societal change

Friendships: How to cultivate online “cozyweb” and in-person relationships in a radically changed world

© 2026 The FLUX Collective. All rights reserved. Questions? Contact flux-collective@googlegroups.com.