🌀🗞 The FLUX Review, Ep. 196

June 26th, 2025

Episode 196 — June 26th, 2025 — Available at read.fluxcollective.org/p/196

Contributors to this issue: Ade Oshineye, MK, Erika Rice Scherpelz, Neel Mehta, Boris Smus, Jasen Robillard, Justin Quimby

Additional insights from: Alex Komoroske, Ben Mathes, Chris Butler, Dart Lindsley, Dimitri Glazkov, Jon Lebensold, Julka Almquist, Kamran Hakiman, Lisie Lillianfeld, Melanie Kahl, Robinson Eaton, Samuel Arbesman, Scott Schaffter, Spencer Pitman, Wesley Beary

We’re a ragtag band of systems thinkers who have been dedicating our early mornings to finding new lenses to help you make sense of the complex world we live in. This newsletter is a collection of patterns we’ve noticed in recent weeks.

“There was something heartbreakingly beautiful about the lights of distant ships, I thought. It was something that touched both on human achievement and the vastness against which those achievements seemed so frail. It was the same thing whether the lights belonged to a caravel battling the swell on a stormy horizon or a diamond-hulled starship which had just sliced its way through interstellar space.”

— Alastair Reynolds, Chasm City

📝 Editor’s note: We’ll be off next week for the US’s July 4th holiday. We’ll be back the week after!

🎛️🎶 Tuned, not tamed

In 1998, Cher’s “Believe” introduced the world to Auto-Tune as an intentional audible effect. What began as a pitch-correction tool evolved into a creative signature, influencing the music industry for years.

We can look at generative AI through a similar lens. LLMs smooth rough edges, polish phrasing, and generate text that feels clean, fluent… and increasingly indistinct. Give image generators a simple prompt, and they will create something both pretty and banal. Used thoughtlessly, these tools pull expression toward the average. However, that’s not the whole story: used effectively, they can become another valuable tool in our creative toolbox. They reliably raise the floor, bringing more people up to a baseline of proficiency. They can also raise the ceiling, but it requires skill, iteration, and intentionality to avoid crashing into said ceiling.

Generative AI does four things at once: it flattens expression toward the average, speeds up iteration, widens access, and shifts our preferences. Each of these shapes what we produce, how we produce it, and how it lands with our audience.

Averaging flattens. Just as Auto-Tune can be used to lock pitch to equal temperament, LLMs pull phrasing toward statistical norms. The result can be language that sounds “right” but lacks soul. In the process, it becomes harder to distinguish the extraordinary from the merely excellent. The middle rises, but the top blurs—unless the user pushes the tool beyond its defaults.

Fast iteration accelerates everything. Auto-Tune turned studio days into instant retakes. LLMs do the same for drafting: endless rephrasings at near-zero cost. It’s liberating, but it can encourage shallow thinking when speed takes precedence over depth.

Widened access democratizes polish. Auto-Tune enables bedroom producers to create chart-ready tracks. LLMs let non-writers produce professional-grade text. That’s powerful. The flip side is that when proficiency becomes a commodity, individual voice and style get lost in the noise, and you can no longer rely on polish as a proxy for the quality of the underlying thinking.

Aesthetic shift turns this into a feedback loop. Auto-Tune didn’t just fix voices—it became a sound. LLMs are doing the same: creating a recognizable “AI-tone”—clean, competent, eerily neutral. Whether one embraces it or fights against it, the aesthetic of out-of-the-box generative AI is now part of our creative process.

This can be incredibly powerful, but it has a cost. As more model-generated text enters the training stream, we face a recursive flattening—LLMs learning from themselves, reinforcing the median and erasing the margins.
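To make the recursive flattening concrete, here is a toy sketch of our own. It is not a model of any real LLM training pipeline. It assumes each "generation" simply fits a bell curve to the previous generation's output and then samples new output from that fit. The function name, sample size, and generation count are all illustrative choices.

```python
import random
import statistics


def simulate_recursive_flattening(generations=200, sample_size=20, seed=0):
    """Toy model: every generation learns only from the previous generation's output.

    Returns the spread (standard deviation) of the data at each generation.
    """
    rng = random.Random(seed)

    # Generation 0: stand-in for "human" data, drawn from a standard normal.
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
    spreads = [statistics.pstdev(data)]

    for _ in range(generations):
        # "Train" on the current data: estimate its center and spread.
        mean = statistics.fmean(data)
        spread = statistics.pstdev(data)

        # "Generate" the next dataset purely from the fitted model.
        data = [rng.gauss(mean, spread) for _ in range(sample_size)]
        spreads.append(statistics.pstdev(data))

    return spreads


if __name__ == "__main__":
    spreads = simulate_recursive_flattening()
    print(f"spread at generation 0:   {spreads[0]:.6f}")
    print(f"spread at generation 200: {spreads[-1]:.6f}")
```

In this sketch, each small sample slightly underestimates the spread of the one before it, and those losses compound, so the margins tend to vanish while the center survives. Real systems are far messier, but the direction of the drift is the point.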

There’s hope that these tools may be used to amplify rather than just average—augmenting us when used with purpose. In that world, LLMs become creative collaborators, not just compressors. They turn us into centaurs. But that hope carries a shadow. What if the tools don’t just elevate, but eventually eclipse us? What if, like a hypothetical Auto-Tune that sings better than any human ever could, LLMs someday outperform us, not by helping us improve, but by rendering us unnecessary?

That’s a tension behind these tools: they amplify whatever posture we bring to them. Passive use trends toward flattening. Intentional use can stretch toward augmentation. The future depends on how we engage with these models… and on how we help them evolve.

For many of us, the question isn’t whether to use these tools—it’s whether we can do so without being absorbed by them. Retaining variety and expression requires intent, whether it’s intentional imperfection, pushing these tools to their extremes, or staying rooted in living cultural traditions.

🛣️🚩 Signposts

Clues that point to where our changing world might lead us.

🚏☀️ Solar panels and microgrids are helping Puerto Rican towns avoid blackouts

Puerto Rico’s centralized electrical grid is notoriously unreliable due to decades of underinvestment and poor maintenance. But when a blackout hit the whole island in April, the lights in the town of Adjuntas stayed on. Adjuntas has a lot of rooftop solar power, and the town worked with the Oak Ridge National Laboratory to interconnect its microgrids so they can exchange power with each other if one goes down or local demand spikes. The resulting decentralization and redundancy provide resilience against outages on the main grid. (Another impressive part is the town’s bottom-up adoption of solar, even as the federal government is redirecting Puerto Rico’s solar power funding to the fossil fuel-powered main grid.)

🚏🤑 Buy-now-pay-later loans will be incorporated into credit scores

FICO, a major provider of consumer credit scores in the US, will launch two new scores that incorporate BNPL loans. Similarly, the BNPL company Affirm announced earlier this year that it’d start reporting its short-term loans to Experian, a credit reporting agency. (This rhymes with news that Fannie Mae and Freddie Mac will now consider crypto assets when assessing risk for mortgage loans.)

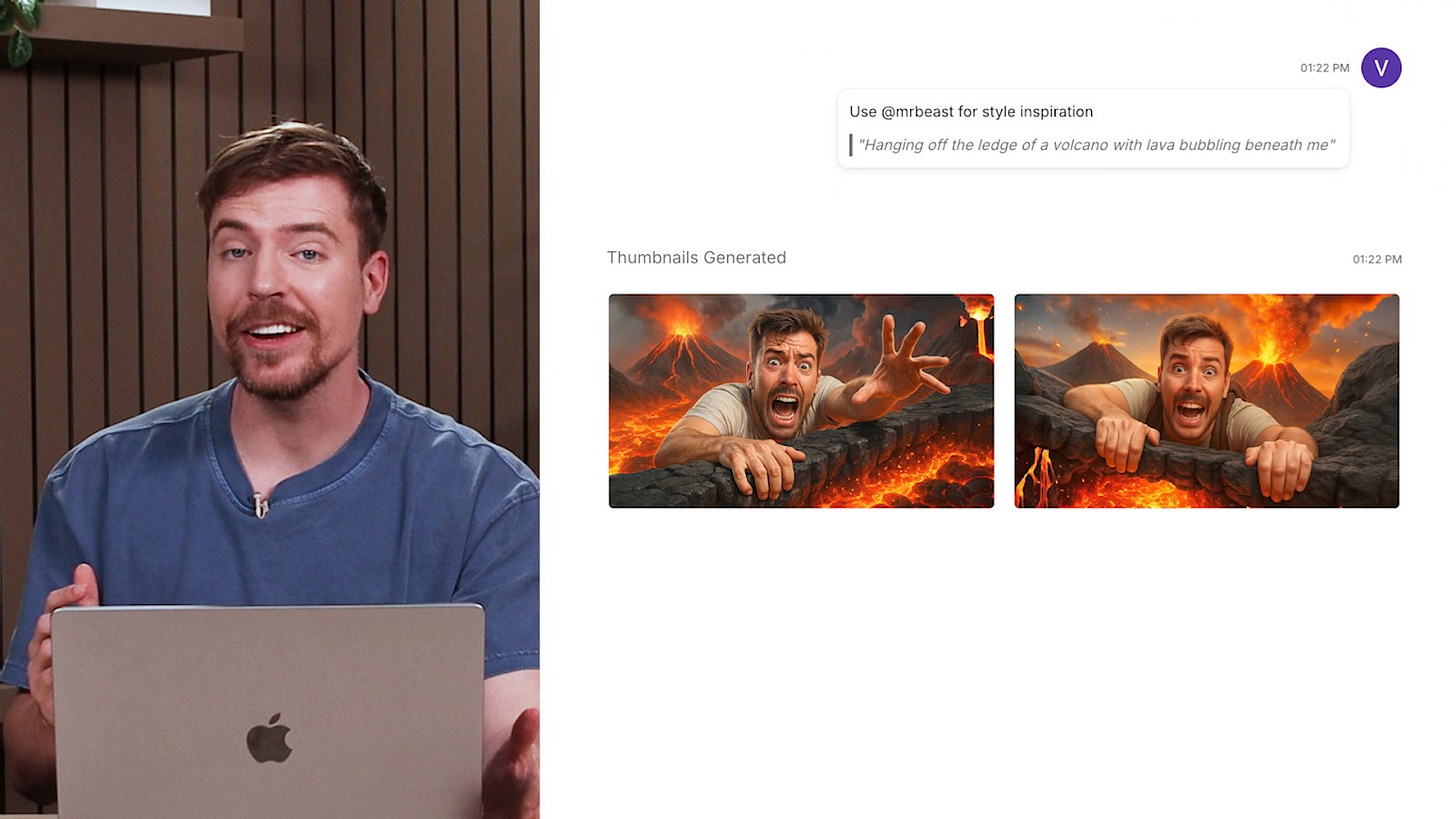

🚏🎞️ MrBeast is launching an AI YouTube thumbnail generator

The popular YouTuber’s startup, ViewStats, is launching an AI tool that’ll let content creators generate video thumbnails in the typical ‘clickbaity’ YouTube style. His tool can also generate thumbnails inspired by any specific YouTuber or swap the user’s face into an existing picture. Those features have drawn criticism that he’s enabling plagiarism, and MrBeast has pledged to make changes, such as only letting users face swap onto their own thumbnails, not others’.

🚏🇻🇳 Vietnam is ending its two-child policy in response to falling birthrates

Falling birthrates aren’t just affecting Europe; Vietnam’s fertility rate has declined to 1.91 children per woman (down from 2.11 in 2021), which is below the global replacement level of approximately 2.3. So, Vietnam has cancelled its decades-old policy that limited families to two children, and in fact, it’s started giving financial rewards to women who have two children. China famously ended its one-child policy in 2016 and has since raised the cap to three children, but its population continues to fall.

📖⏳ Worth your time

Some especially insightful pieces we’ve read, watched, and listened to recently.

The Real Cost of Open-Source LLMs (Artificial Intelligence Made Simple) — Describes in detail how “open-source LLMs are not free — they just move the bill from licensing to engineering, infrastructure, maintenance, and strategic risk.” While ChatGPT or Claude are just an API call away, self-hosting even a modest-sized LLM can cost upwards of $500,000 a year, not to mention the opportunity cost of employing talented engineers who aren’t working on value-adding products.

Multiplayer AI Chat and Conversational Turn-Taking (Interconnected) — Matt Webb shares what his team learned from building prototype chat rooms that include both humans and multiple AI bots, each with a different skillset or personality. One surprisingly tricky part was deciding when a bot should speak up; that required studying linguistics papers to understand the subtle norms behind conversational turn-taking.

How Smart Is an Octopus? (The Atlantic) — Dives into the fascinating world of octopuses, whose minds and eyes evolved on a separate branch of the evolutionary tree yet resemble ours in surprising ways. Octopuses have a far more complex nervous system than other mollusks (500 million neurons versus 18,000 for a sea slug). Is it possible that they evolved such complexity to do specific tasks that require consciousness, like finding different ways of doing the same thing?

Casino Lights Could Be Warping Your Brain to Take Risks, Scientists Warn (Science Alert) — Shares a study that found that blue light makes people less sensitive to financial losses, which makes them more likely to take risks and gamble. (No wonder, then, that casinos and gambling apps alike bathe players in blue light!) Certain ganglion cells that “play an important role in motivation and sensitivity to reward” are known to “respond preferentially to blue light,” so the mechanism is plausible.

🔍🦎 Lens of the week

Introducing new ways to see the world and new tools to add to your mental arsenal.

This week’s lens: pace layer atavism.

In biology, atavism refers to the recurrence of ancestral traits, such as vestigial legs on a snake. Pace layers represent how different layers of civilization evolve at different speeds; under normal conditions, slower layers provide the foundation that allows faster layers to move quickly.

However, when changes occur too quickly, we become caught in turbulence caused by the interference patterns of the lower layers. When the world changes faster than we can absorb, we reach down into the deeper, slower strata and bring old, familiar patterns back to life. This is pace layer atavism. It is more than nostalgia: it is a re-expression of deeply embedded forms that have lain dormant.

The interactions between layers can be dramatic. It’s like plate tectonics: when fast-moving surface layers collide with deeper strata, there’s friction, turbulence, and pressure. The result is geologic shift: mountain ranges, subduction zones, eruptions.

In human systems, the interference patterns between fast and slow layers create hotspots of innovation, resistance, or regression. Decades of economic acceleration hollowed out traditional communities, giving rise to strongman politics and tribal allegiances. Rapid digital transformation happened alongside a revival of artisanal crafts and local food movements. The neoclassical revival in architecture came alongside the Industrial Revolution.

When novelty overwhelms, we often revert to what once worked. This buys us valuable time to process change. The risk arises when atavism overshoots. What begins as adaptive retreat can calcify into maladaptive entrenchment. Traditional values harden into fundamentalism. Technological skepticism curdles into conspiracy. Yearning for community devolves into in-group purity and out-group exclusion.

When it works well, we don’t return to the past—we spiral through it looking for a productive synthesis. We find the elements of the old that still make sense with the new and adapt. Modern maker culture isn’t traditional craftsmanship. A neoclassical building differs significantly from the Greek or Roman architecture that inspired it.

The task isn’t to resist the undertow—it’s to surf it wisely.

© 2025 The FLUX Collective. All rights reserved. Questions? Contact flux-collective@googlegroups.com.