🌀🗞 The FLUX Review, Ep. 193

June 5th, 2025

Available at read.fluxcollective.org/p/193

Contributors to this issue: Ade Oshineye, Dart Lindsley, Erika Rice Scherpelz, Neel Mehta, Boris Smus, MK

Additional insights from: Alex Komoroske, Ben Mathes, Chris Butler, Dimitri Glazkov, Jasen Robillard, Jon Lebensold, Julka Almquist, Justin Quimby, Kamran Hakiman, Lisie Lillianfeld, Melanie Kahl, Robinson Eaton, Samuel Arbesman, Scott Schaffter, Spencer Pitman, Wesley Beary

We’re a ragtag band of systems thinkers who have been dedicating our early mornings to finding new lenses to help you make sense of the complex world we live in. This newsletter is a collection of patterns we’ve noticed in recent weeks.

“In most people’s vocabularies, design means veneer. But to me, nothing could be further from the meaning of design. Design is the fundamental soul of a man-made creation that ends up expressing itself in successive outer layers.”

— Steve Jobs

🤖🧠 Of augments and avatars

In any app, a user might interpret their interactions with a large language model through the lens of personhood or the lens of augmentative tools. This isn't about consciousness or AGI or the definition of intelligence. It’s a matter of perception, much like whether a brand aims to be “friendly” or “professional.” In other words, does the app want you to think that the computer is a person?

Some applications, such as dating apps or therapeutic chatbots, set up an implicit expectation of personhood. In the personhood affordance, the LLM often fills a role that traditionally requires human qualities, such as emotional connection, nuanced judgment, or accountability. Sixty years ago, ELIZA used this personhood affordance (in a way that quickly revealed its limitations). This affordance also appears in robotics, from real robotic dogs to fictional plastic pals that are fun to be with.

But there’s a tension here. IBM long ago cautioned that “A computer can never be held accountable; therefore, a computer must never make a management decision.” If an LLM presents an affordance of personhood, the question of its capacity for accountability (or lack thereof) becomes central to its perceived effectiveness and ethical use. It’s not just about management decisions. It's about any domain where accountability—and the nuanced, often messy, reality of human interaction—is key. An LLM can't be fired. It can't feel remorse. It can't understand (or be held accountable for) the long-term impact of a poorly worded dismissal email.

On the flip side are LLM applications with a tool affordance. Here, the LLM serves as a powerful augment rather than a simulated human. For example, an LLM might scan internal communications and suggest people with shared interests. You might use it to generate and refine documents, or to summarize complex information and distill key themes from large volumes of data.

In these cases, the value of the LLM comes from offloading routine tasks, providing rapid first passes, or highlighting patterns that would take a human far longer to discern. It frees up the user to focus on higher-level goals such as social connection, strategy, interpretation, and action. It’s not about social empathy or judgment. And critically, the LLM is not accountable for the final outcome: the human is.

Augmentative tools are certainly less fraught, but some problems need a more person-like touch. When the utility you are pursuing leans toward a personhood affordance, the difficulty increases significantly. A chatbot to keep lonely people company can lead to terrible outcomes (trigger warning: self-harm).

This doesn’t mean you can’t use LLMs to do things that a person currently does. However, consider how to make the affordances lean in the direction of augmentative tools rather than personhood. For example, how an AI therapist uses language matters. Does it come across as a person, using “I” and “myself”, or does it come across as more of a disembodied source of advice?

> User: I’m feeling very anxious at work. I’m worried everyone thinks I’m failing.

> System [personhood affordance]: I understand that feeling; it's tough when you're caught in a spiral of self-doubt and worry about how others perceive your efforts. I talk with loads of people and I wonder if they think I’m giving them good responses.

> System [tool affordance]: Anxiety at work is very understandable and common. You’re not alone in that. Most people over-focus on what people think of them, even though most of the time, everyone else is also so busy thinking about what other people think of them that they aren’t thinking about you. But the worry that someone might be thinking something bad is hard to drop.
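One rough way to notice which signal a draft response is sending is to look for first-person language. The sketch below is only an illustration under stated assumptions: the word list and the `first_person_terms` helper are hypothetical, and counting pronouns is a crude proxy for how a reply will actually be perceived.

```python
import re

# Hypothetical word list: first-person singular terms that may nudge a reply
# toward a personhood affordance. This is an assumption, not an established standard.
FIRST_PERSON = {"i", "i'm", "i've", "i'd", "i'll", "me", "my", "mine", "myself"}


def first_person_terms(text: str) -> list[str]:
    """Return the first-person terms found in text, in order of appearance."""
    # Normalize curly apostrophes so that "I’m" and "I'm" are treated alike.
    normalized = text.replace("’", "'").lower()
    words = (w.strip("'") for w in re.findall(r"[a-z']+", normalized))
    return [w for w in words if w in FIRST_PERSON]


personhood_reply = (
    "I understand that feeling; it's tough when you're caught in a spiral of "
    "self-doubt and worry about how others perceive your efforts. I talk with "
    "loads of people and I wonder if they think I’m giving them good responses."
)
tool_reply = (
    "Anxiety at work is very understandable and common. "
    "You’re not alone in that."
)

print(first_person_terms(personhood_reply))
print(first_person_terms(tool_reply))
```

A check like this can flag drafts for a human to review; it cannot tell you whether a given use of “I” is appropriate for your application.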

Norms (and models) will continue to evolve. However, design always requires interpretation. How a system is interpreted shapes everything from user expectations to ethical risk. You don’t have to solve the personhood vs. tool tension outright, but you do have to be aware of which signals your application is sending and adjust your design accordingly.

🛣️🚩 Signposts

Clues that point to where our changing world might lead us.

🚏🧑‍💻 A hot “AI” code-generation startup was actually 700 Indian engineers

A buzzy London-based startup called Builder.ai said it used AI to create software for customers; it was backed by Microsoft and picked up a $1.5 billion valuation. But it was recently revealed that there was little AI involved at all: hundreds of human engineers in India wrote code based on customer requests, and the company simply marketed their output as AI-generated. The company filed for bankruptcy shortly after the news broke, and it was further revealed that the startup had inflated revenue projections by 300% and incurred nearly $400 million in unpaid cloud computing fees.

🚏🏢 More US office space is being removed than added for the first time in 25 years

For the first time in 25 years, the total amount of office space in the US is expected to decline. This year, across the top US markets, 23.3 million square feet of office space is slated to be demolished or converted to other uses (such as multi-family residential). In comparison, just 12.7 million square feet will be created. Remote work is obviously a factor; office vacancies have declined somewhat since the pandemic but remain high at 19%. Still, demand is increasing as many employers enforce return-to-office (RTO) mandates (and in a soft labor market like this, employees don’t have much choice); combined with the reduced supply, office rents are expected to start increasing.

🚏🍊 Americans are spending relatively less on groceries than ever before

The volume of personal grocery expenses in the US has been increasing steadily for decades; it surged during the COVID-19 pandemic and has remained at a high level. But while total expenditures are high, groceries have gotten relatively cheaper compared to other goods, and they now represent a smaller portion of overall US consumer expenses than ever. Consumers in the ‘60s spent almost 20% of their budget on groceries; that figure is now down below 8%. (The denominator dropped during COVID as people spent less on everything else, driving a spike in the graph, but the trend is now back to normal.)

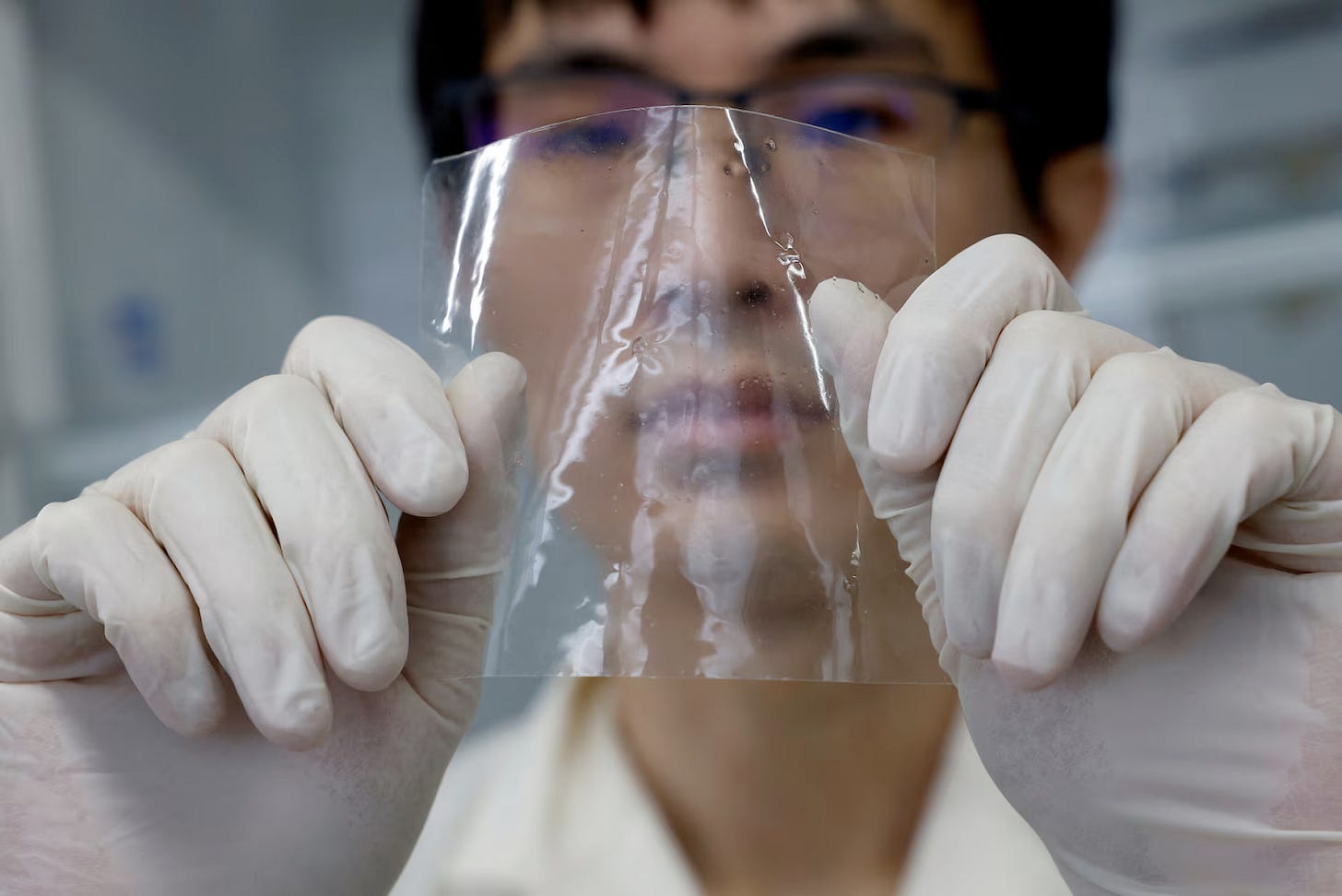

🚏🧂 Scientists developed plastic that dissolves in seawater

Researchers in Japan have created plastics that are “as strong as petroleum-based plastics” but break down into their basic components in seawater in just a few hours; they’re non-toxic and don’t release microplastics. The trick is that these plastics dissolve in the presence of salt, which also means they can break down in the ground, since soil contains salt as well. Their components can be digested by naturally occurring bacteria.

📖⏳ Worth your time

Some especially insightful pieces we’ve read, watched, and listened to recently.

The Hidden Time Bomb in the Tax Code That’s Fueling Mass Tech Layoffs (Quartz) — Observes that, since the 1950s, American companies “could deduct 100% of qualified research and development spending in the year they incurred the costs” from their taxes, meaning that engineers building tech products effectively cost a lot less. However, in the 2017 tax bill, lawmakers attempting to offset tax cuts elsewhere ended this immediate deduction, requiring companies to spread the write-off over several years instead, and delayed the change so that it took effect in 2022. Perhaps it’s now less of a surprise that tech layoffs started exploding in 2023.

Chronos and Kairos in Strategic Decision Making (The Uncertainty Project) — Explores how business planning relates to two Greek words for time: chronos (regular wall-clock time) and kairos (“a critical moment of opportunity”). Chronos corresponds to detailed planning cycles and disciplined execution, while kairos involves adapting quickly to new opportunities, even if that means bypassing traditional processes. Leaders need to switch between the two modes based on the levels of uncertainty, urgency, and opportunity facing their organization.

I Trained With Russian Diplomats. I Can Tell You How They Work. (The Moscow Times) — A look into how Russian diplomats are trained at MGIMO, Russia's elite diplomatic academy. Students bow to the deities of geopolitical realism like Morgenthau, Waltz, Primakov, and Mearsheimer, for whom “power is truth, might makes right, and ‘spheres of influence’ are gospel.”

The Scammer’s Manual: How to Launder Money and Get Away With It (The New York Times) [Archived] — Examines the ecosystem of criminals involved in the infamous ‘pig butchering’ scams: human traffickers who make their victims scam people abroad, money launderers and their ‘mules,’ and the shady internet marketplaces (often arranged on Telegram) that connect scam bosses and launderers.

🔍📆 Lens of the week

Introducing new ways to see the world and new tools to add to your mental arsenal.

This week’s lens: Machiavellian empathy.

Two senior team members are locked in conflict. Both sharp, both principled. Both technically right… and both fed up with the other. Meetings twist into sparring matches. Slack threads get tense. The manager looks at the feelings involved and sees what each needs: one to work toward promotion, the other to avoid repeating a past mistake. He restructures the conversations to help each get what they need. Not only because he cares deeply, but because ignoring the tension would damage the team and the business.

This is Machiavellian empathy: using emotional awareness as part of strategic reasoning. Although Niccolò Machiavelli is often associated with manipulation, he was a pragmatist (including shifting his philosophy to write The Prince… after he was tortured and exiled by the people he would come to work for). While Machiavellian empathy can be used to manipulate and achieve selfish ends, it can also be used to account for emotional reactions as a core part of system dynamics.

You don’t use this lens to be nice. You use it because ignoring emotion breaks things. Feelings drive uptake, resistance, trust, and timing. Model them poorly, and even your best ideas will fail to land. Model them well, and it can help hold a system together under pressure.

That doesn’t mean that you aren’t nice if you use this lens. For example, it can help you understand why a mission-focused employee might be insulted by a financially lucrative counteroffer. Understanding how people will react allows you to better take their desires into account (although it doesn’t force you to).

However, the very need for that caveat reveals an important tension. Machiavellian empathy sacrifices moral simplicity. You’re not just doing the “right thing”—you’re calculating how others will interpret it. You’re asking: what will feel fair? What will signal loyalty? What reaction am I provoking? Even if your aims are aligned and ethical, the act of modeling strips away the innocence of acting on pure virtue. If you avoid betrayal because the fallout would be too expensive, is that loyalty or risk management? It’s virtue ethics vs consequentialism… and consequentialism can feel a bit calculating and unfeeling.

But this nuance is necessary. In small, high-trust circles, you might get by on instinct. But in large, layered, emotionally plural systems, instinct alone fails. You need inference. You need second-order thinking. And you need to see emotion not as noise, but as signal.

Virtue ethics—doing the right thing because of your moral character—makes a strong daily operating system. It’s fast, humane, and keeps you grounded. But when the stakes rise—when decisions impact many people differently, have far-reaching second and third-order effects, or close off future paths—that’s where Machiavellian empathy becomes critical. It’s not a replacement for virtue. It’s a recognition that in complex environments, impact eclipses intention.

Machiavellian empathy asks you to consider what others will feel—and to build that into your reasoning. Whether that leads to alignment or manipulation depends on your goals and your values. But pretending emotion doesn’t shape systems? That’s not principle. That’s denial.

© 2025 The FLUX Collective. All rights reserved. Questions? Contact flux-collective@googlegroups.com.