🌀🗞 The FLUX Review, Ep. 165

October 10th, 2024

Episode 165 — October 10th, 2024 — Available at read.fluxcollective.org/p/165

Contributors to this issue: Dart Lindsley, Erika Rice Scherpelz, Spencer Pitman, Justin Quimby, Ade Oshineye, Wesley Beary, Boris Smus, Jasen Robillard, Neel Mehta, MK

Additional insights from: Ben Mathes, Dimitri Glazkov, Alex Komoroske, Robinson Eaton, Julka Almquist, Scott Schaffter, Lisie Lillianfeld, Samuel Arbesman, Jon Lebensold, Melanie Kahl, Kamran Hakiman, Chris Butler

We’re a ragtag band of systems thinkers who have been dedicating our early mornings to finding new lenses to help you make sense of the complex world we live in. This newsletter is a collection of patterns we’ve noticed in recent weeks.

“Wanderer, there is no road. The road is made by walking.”

― Antonio Machado

🌀🧭 Lost in confusion

In the early stages of the COVID-19 pandemic, many leaders believed the problem could be solved through expert analysis and well-defined processes. They treated the situation as complicated, to use the term from the Cynefin framework. However, many parts of reality were more complex, with evolving variables, unpredictable human behavior, tribal identification, and uncertain outcomes. Such misclassification led to delayed or ineffectual responses and inadequate measures as leaders struggled to adapt to a situation that defied easy categorization.

We were in the realm of confusion, which lies in the center of the Cynefin framework. In this space, clarity is absent, and it’s unclear which of the other Cynefin domains applies (complex, complicated, chaotic, or clear). This domain embodies uncertainty about uncertainty itself.

One of the most significant risks from the confusion domain is misclassification. Treating the complex phenomenon of the COVID-19 pandemic as complicated is a prime example. When we misclassify a complex problem as complicated, we treat it as one that can be solved with data and engineering rather than as a situation requiring high adaptability, experimentation, and learning in the face of rapid change.

Misclassification can occur in various scenarios. You might believe you’re dealing with a clear situation where cause and effect are well understood, but the reality could be far more complicated, complex, or even chaotic. Conversely, treating something as complex when it’s clear can lead to massively overwrought solutions for simple problems. Getting the classification wrong can result in missed opportunities to find more effective approaches to the issues in front of us.

The confusion domain challenges us to confront the unsettling reality that our assumptions and cognitive biases can—and more often than not, will—lead us astray. It challenges us to consider not just where we are, but also how we might be wrong about where we are. This layer of self-reflection—this meta-classification—adds a new dimension to the Cynefin framework, encouraging careful examination of our initial conclusions.

The confusion domain reminds us that sometimes, the first step to clarity is acknowledging that we might not yet—or ever—fully understand the situation, and that’s okay. By staying open to the possibility of being wrong, we can better adapt, learn, and ultimately find our way through.

🛣️🚩 Signposts

Clues that point to where our changing world might lead us.

🚏🗳️ Almost 3 million Americans have now voted early

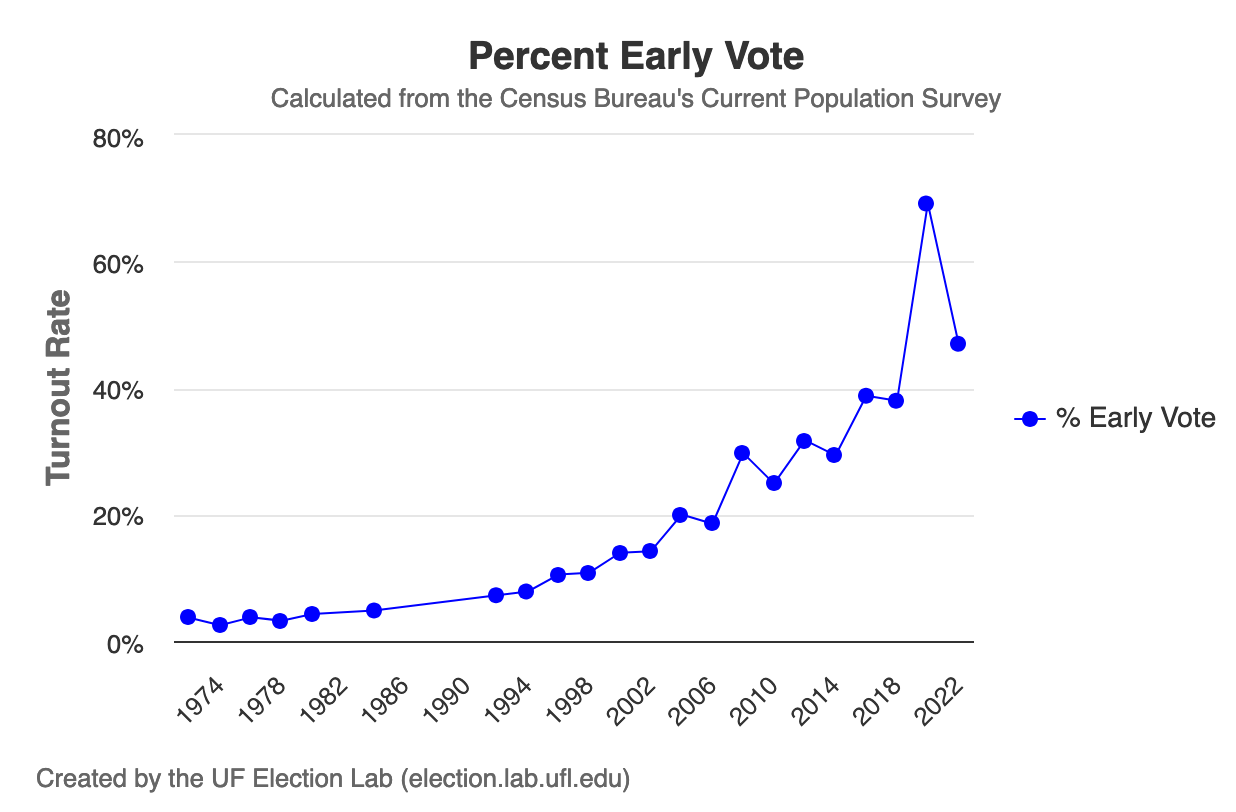

Early voting (both mail-in voting and early in-person voting) is in full swing for the US’s November 2024 elections, and the excellent University of Florida Election Lab has found that over 2.9 million voters have already cast an early ballot. This is less than the 9 million people who had voted early by this point in the 2020 election, but much of that was COVID-induced mail-in voting. The long-term trend appears strong, though: since the 1970s, more and more people have been voting early.

🚏🕴️ AI bots will let you auto-apply to thousands of jobs on LinkedIn

A popular new Python script will automatically crawl through LinkedIn’s job board for you and use an LLM to submit personalized job applications, including auto-written resumes and cover letters. Users have reported applying to hundreds of jobs an hour, with one user sending over 2800 submissions; they’ve seen a lot more success getting interviews than when applying manually. The script’s creator says it’s a way to fight back against companies that use AI to screen applications (indeed, this would result in AIs talking to other AIs, with no humans in the loop).

🚏🚪 Chinese hackers used a backdoor to hack major American ISPs

A team of hackers backed by the Chinese government recently hacked three large US-based broadband providers, and experts think they exploited ‘backdoors’ that were originally built into the encryption software to enable (legal) wiretapping requests by law enforcement. As the outlet 9to5Mac put it, “The moment you build in a backdoor for use by governments, it will only be a matter of time before hackers figure it out… You cannot have an encryption system which is only a little bit insecure any more than you can be a little bit pregnant.”

🚏👾 The White House made a Reddit account to talk about hurricanes amidst misinformation

The President’s office has started posting on Reddit under its official “whitehouse” account; it started threads on the r/NorthCarolina and r/Georgia forums to discuss the federal response to hurricanes Helene and Milton. It’s an unconventional way for the government to spread its message, but it’s an understandable reaction to the misinformation and conspiracy theories that’ve been swirling online about FEMA, the agency that coordinates disaster response.

📖⏳ Worth your time

Some especially insightful pieces we’ve read, watched, and listened to recently.

Why A.I. Isn’t Going to Make Art (New Yorker) — Science fiction author Ted Chiang (also known for his essay “ChatGPT Is a Blurry JPEG of the Web”) poses the question: can you have creativity without an inner life? His implicit answer is an emphatic no, but that does not mean that new AI-based tools won’t empower artists to reach new heights.

Magicians Wouldn’t Be Engineers (Bret Devereaux) — The historian behind the popular blog ACOUP disagrees with the common idea that, if magic were real, its rules would be fully determined and systematized, and its practitioners would effectively be scientists. For most of human history, “physics itself was a ‘soft’ magic system”: people knew what worked but had no idea how it worked. Consider the medieval blacksmith who had no idea how metals’ atomic structures worked, but could forge a sword anyway with “craft knowledge.” This pattern is reminiscent of James C. Scott’s techne and metis.

How to Get Rich in Tech, Guaranteed (Startup L. Jackson) — Observes that Big Tech is a reliable way to get rich, but “startups are the only way to get 20 years of experience in five.” The author’s advice is simple: find a startup run by high-integrity, smart, and hard-working people with a compatible culture, and sprint toward the milestone the company needs to get to the next round!

Shitposting, Shit-Mining and Shit-Farming (Programmable Mutter) — Argues that a little bit of shitposting (silly snarky posts) helps social media platforms, but if platforms like Twitter/X let such low-quality posts take over, they quickly decay into “shit-mining” (trying to monetize data, like when Twitter shut off its public API) and “shit-farming” (finding people who like being fed junk content and selling their attention to grifters).

📚🛋️ Book for your shelf

A book that will help you dip your toes into systems thinking or explore its broader applications.

This week, we recommend Why Greatness Cannot Be Planned: The Myth of the Objective by Kenneth O. Stanley and Joel Lehman (2015, 104 pages).

In this instant classic, the authors challenge the belief that ambitious objectives are the key to innovation. Instead, they argue that true breakthroughs come from exploring indirect paths, which they call “stepping stones.” The book pushes against conventional wisdom, suggesting that pursuing clear objectives and plotting a direct path forward often blinds us to unexpected opportunities along the way.

The key insight here is the idea of “novelty search,” which values curiosity and exploration over rigid goal-setting. Innovation, they argue, thrives on detours and local progress, and using objectives as benchmarks for success can cause us to miss the necessary deviations that lead to real breakthroughs. By focusing too narrowly on reaching an end goal, we risk overlooking the value of wandering, where the most transformative discoveries often occur.

Although generative AI hadn’t yet gone mainstream when this book was published in 2015, the technology illustrates exactly the tension the authors describe. AI accelerates the process of exploring ideas and surfacing novel connections, enabling faster, deeper exploration. But as the book warns, optimizing for clear objectives can sometimes skip the meandering, trial-and-error processes that lead to serendipitous discoveries. The challenge today is not whether AI is creative, but how we balance its efficiency with the kind of open-ended exploration that drives human innovation, the very wandering that Stanley and Lehman highlight as crucial for uncovering hidden stepping stones.

This book is a worthwhile read because it invites us to rethink how we approach innovation, encouraging openness to exploration over rigid goals—a lesson that feels especially relevant as AI reshapes how we navigate creativity and discovery.

© 2024 The FLUX Collective. All rights reserved. Questions? Contact flux-collective@googlegroups.com.